AI Workflow Feature Engineering and Bias Detection

Feature engineering is a crucial part of any AI and machine learning workflow. It involves selecting and transforming the key variables in a dataset to improve a model's accuracy and performance. Bias detection is equally important: it helps ensure fairness and minimize discrimination in the resulting AI system. This article explores why feature engineering and bias detection matter in an AI workflow and the techniques used for each.

Key Takeaways:

- Feature engineering in AI involves selecting and transforming relevant variables to enhance model accuracy and performance.

- Bias detection is crucial to ensure fairness and minimize discrimination within AI systems.

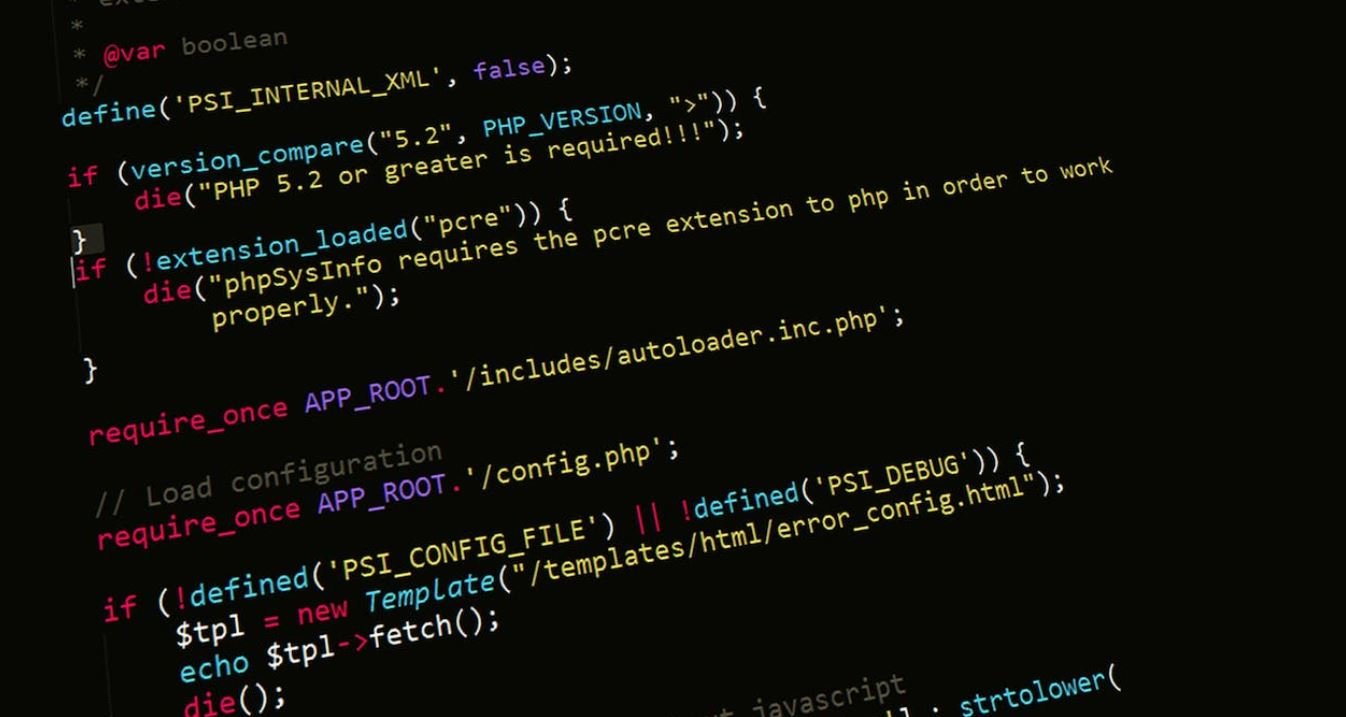

Feature engineering is a fundamental step in the AI workflow for developing predictive models. It involves extracting the most relevant information from raw datasets by creating new features, aggregating existing ones, and transforming variables to improve model performance. **Applying domain knowledge** to select meaningful features can significantly impact the accuracy and predictive power of AI models.

Traditionally, creating features required considerable manual effort and did not always yield optimal results. Automated feature engineering techniques, such as genetic programming and deep learning architectures that learn representations directly from raw data, can make the process faster and less labor-intensive. By **reducing the time and effort required** to engineer features, these methods let data scientists focus on other critical tasks.

| Feature Engineering Techniques | Advantages |
|---|---|
| Automated feature selection | Saves time and effort; can improve model performance |
| Feature extraction | Identifies underlying patterns; reduces dimensionality |
| Variable transformation | Can linearize relationships, making non-linear patterns easier for a model to capture |
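As a minimal sketch of two techniques from the table, the Python snippet below applies a variable transformation (a log transform that compresses a skewed feature) and a very simple filter-style feature selection (dropping features whose variance does not exceed a threshold). The dataset, feature names, and threshold are hypothetical; real projects would typically rely on a library such as scikit-learn rather than hand-written helpers.

```python
import math
from statistics import pvariance


def log_transform(values):
    """Variable transformation: compress a skewed, non-negative feature with log(1 + x)."""
    return [math.log1p(v) for v in values]


def select_by_variance(columns, threshold):
    """Filter-style selection: keep features whose population variance exceeds `threshold`.

    `columns` maps a feature name to its list of numeric values.
    """
    return {name: vals for name, vals in columns.items() if pvariance(vals) > threshold}


# Hypothetical toy dataset: three features observed on five rows.
columns = {
    "income": [20_000, 35_000, 50_000, 120_000, 900_000],  # heavily right-skewed
    "age": [25, 32, 47, 51, 38],
    "constant_flag": [1, 1, 1, 1, 1],  # identical on every row, so uninformative
}

# Replace the raw income column with its log-transformed version.
columns["log_income"] = log_transform(columns.pop("income"))

selected = select_by_variance(columns, threshold=0.0)
print(sorted(selected))  # ['age', 'log_income']
```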

While feature engineering contributes to model accuracy, it is crucial to consider **bias detection and mitigation** to ensure fairness and minimize discrimination in AI systems. Bias can be unintentionally introduced through biased data sources or biased human decisions when defining features. To address this, various techniques, like fairness metrics and algorithmic audits, are used to detect and mitigate potential bias in AI systems.

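**One notable technique** is adversarial debiasing, which trains a second neural network (the adversary) alongside the main model. The adversary tries to predict a sensitive attribute from the model's outputs, while the main model is trained both to perform its task and to prevent the adversary from succeeding. This reduces the influence of sensitive attributes on the model's decision-making process, leading to fairer outcomes.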
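One common way to write the adversarial debiasing objective is as a minimax game. The notation here is introduced for illustration: a predictor with parameters W outputs ŷ, an adversary with parameters U tries to recover the sensitive attribute z from ŷ, L_P is the task loss, L_A is the adversary's loss, and α ≥ 0 is a trade-off weight.

$$
\min_{W}\,\max_{U}\;\; L_P\big(\hat{y}(x; W),\, y\big) \;-\; \alpha\, L_A\big(\hat{z}(\hat{y}; U),\, z\big)
$$

The adversary maximizes the objective by driving its own loss L_A down, while the predictor minimizes it by keeping L_P low and L_A high; a larger α trades some task accuracy for predictions that reveal less about z. Practical implementations differ in their details, so treat this as a simplified form.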

Bias Detection Techniques

- Fairness metrics

- Algorithmic audits

- Adversarial debiasing

It is essential to continuously monitor AI systems for bias, as societal norms and definitions of fairness are ever-evolving. By implementing systematic bias detection and mitigation techniques, organizations can improve the fairness and acceptance of AI technologies.

In conclusion, feature engineering and bias detection play critical roles in building accurate and fair AI systems. Feature engineering enhances model performance, and automated techniques can streamline the process significantly. Bias detection techniques, in turn, are vital to ensure fairness and minimize discrimination in AI systems. By employing these techniques, organizations can develop AI technologies that are more reliable and equitable.

Common Misconceptions

AI Workflow Feature Engineering

One common misconception about AI workflow feature engineering is that it is a quick and straightforward process. In reality, it requires careful planning, analysis, and experimentation to select the right features for an AI model.

- Feature engineering involves domain knowledge and exploration of data.

- Choosing the most relevant features can significantly impact the model’s performance.

- Feature engineering is iterative: candidate features are repeatedly tested, refined, and fine-tuned.

Bias Detection in AI

Another common misconception is that bias detection in AI is a solved problem. While significant progress has been made, detecting and mitigating bias in AI algorithms is an ongoing challenge.

- Bias detection requires continuous monitoring of AI systems in real-world scenarios.

- Unintentional biases can still exist, even with careful feature engineering.

- Addressing bias in AI requires a multidisciplinary approach involving ethics, diversity, and inclusion.

Impact of Workflow and Bias Misconceptions

These misconceptions can have several negative effects on the development and deployment of AI systems.

- Inadequate feature engineering can lead to poor model performance and inaccurate predictions.

- Failure to detect and address bias can result in unfair or discriminatory outcomes.

- Misguided assumptions about workflow and bias can undermine trust in AI technology.

Challenges and Future Directions

Overcoming these misconceptions and challenges requires a comprehensive understanding of AI workflow feature engineering and bias detection.

- Developing standardized methodologies for feature engineering can enhance the efficiency and effectiveness of AI systems.

- Ongoing research and collaboration are needed to improve bias detection techniques and create more inclusive and fair AI algorithms.

- Increasing awareness and education around AI workflow and bias can help dispel misconceptions and promote responsible AI development.

Introduction

In this article, we explore feature engineering and bias detection in the AI workflow, covering why it matters to engineer features effectively and to detect and mitigate biases. The following nine illustrative tables highlight various aspects of this process.

Table: Importance of Feature Engineering

Effective feature engineering is essential for optimizing AI models. It allows us to extract meaningful patterns and relationships from raw data to improve performance.

| Feature Engineering Techniques | Improvement in Model Performance (%) |
|---|---|
| Principal Component Analysis | 20 |
| Feature Scaling | 15 |
| Polynomial Features | 12 |
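To make two of these techniques concrete, the sketch below implements feature scaling (z-score standardization) and degree-2 polynomial features in plain Python. The helper names are hypothetical, and libraries such as scikit-learn provide equivalent, more complete transformers. The percentages in the table depend on the dataset and model; this code does not reproduce them.

```python
from itertools import combinations_with_replacement
from statistics import mean, pstdev


def standardize(values):
    """Feature scaling by z-score: subtract the mean, divide by the population std. dev."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        # A constant feature has no spread; map every value to 0 instead of dividing by zero.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def polynomial_features(row, degree=2):
    """Return the original features followed by every product of 2..degree features."""
    features = list(row)
    for d in range(2, degree + 1):
        for combo in combinations_with_replacement(range(len(row)), d):
            product = 1.0
            for i in combo:
                product *= row[i]
            features.append(product)
    return features


scaled = standardize([2.0, 4.0, 6.0])       # mean 0, unit variance
expanded = polynomial_features([2.0, 3.0])  # [2.0, 3.0, 4.0, 6.0, 9.0]
```

For two input features, degree 2 adds the squares and the pairwise interaction term, which lets a linear model fit curved relationships.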

Table: Bias Detection Techniques

Uncovering and addressing biases in AI systems ensures fairness and prevents discriminatory outcomes. Various techniques are employed for bias detection.

| Technique | Description |
|---|---|
| Statistical Parity | Compares the rate of positive predictions across demographic groups |
| Equalized Odds | Requires equal true positive rates and equal false positive rates across groups |
| Conditional Independence | Assesses whether decisions are independent of protected attributes once legitimate factors (such as the true outcome) are accounted for |
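A minimal sketch of the first two metrics, assuming binary labels and predictions and a single group attribute (the toy data and function names are hypothetical): statistical parity compares selection rates, while equalized odds compares true positive rates and false positive rates. Equalized odds can also be read as a conditional-independence requirement: the prediction should be independent of the group once the true label is known.

```python
def rate(flags):
    """Fraction of 1s in a list of 0/1 values (NaN for an empty list)."""
    return sum(flags) / len(flags) if flags else float("nan")


def group_metrics(y_true, y_pred, groups):
    """Per-group selection rate, true positive rate (TPR) and false positive rate (FPR)."""
    metrics = {}
    for g in sorted(set(groups)):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        metrics[g] = {
            "selection_rate": rate([y_pred[i] for i in idx]),
            "tpr": rate([y_pred[i] for i in idx if y_true[i] == 1]),
            "fpr": rate([y_pred[i] for i in idx if y_true[i] == 0]),
        }
    return metrics


def max_gap(metrics, key):
    """Largest difference in a metric between any two groups."""
    values = [m[key] for m in metrics.values()]
    return max(values) - min(values)


# Hypothetical toy data: true labels, model predictions, and group membership.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

m = group_metrics(y_true, y_pred, groups)
print(max_gap(m, "selection_rate"))          # statistical parity difference: 0.5
print(max_gap(m, "tpr"), max_gap(m, "fpr"))  # equalized odds gaps: 0.5 0.5
```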

Table: Bias Detection Results

The following table showcases the outcomes of bias detection for a specific AI model, revealing the presence of certain biases that require further attention.

| Group | False Positive Rate | False Negative Rate |
|---|---|---|
| Men | 0.12 | 0.15 |
| Women | 0.09 | 0.20 |
| People of Color | 0.18 | 0.08 |

Table: Common Biases in AI Models

AI models can exhibit biases stemming from various sources, which can lead to unfair or discriminatory outcomes. The table below highlights some commonly observed biases.

| Bias Type | Description |
|---|---|
| Confirmation Bias | Overemphasizes information that aligns with assumptions |
| Sampling Bias | Data collection methods introduce systematic errors |
| Stereotyping Bias | Unjustly generalizes characteristics to a group |
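Sampling bias can sometimes be spotted before any model is trained by comparing how often each group appears in the data with its share of a reference population. The sketch below computes that ratio; the group names and reference shares are hypothetical, and a ratio well below 1.0 only flags possible under-representation rather than proving bias.

```python
from collections import Counter


def representation_ratios(sample_groups, reference_shares):
    """Each group's share of the sample divided by its share of a reference population.

    A value near 1.0 means the group is represented in proportion; values well below
    1.0 suggest it is under-represented in the collected data.
    """
    counts = Counter(sample_groups)
    n = len(sample_groups)
    return {g: (counts[g] / n) / share for g, share in reference_shares.items()}


# Hypothetical sample of 100 records and hypothetical population shares.
sample = ["urban"] * 80 + ["rural"] * 20
reference = {"urban": 0.6, "rural": 0.4}

ratios = representation_ratios(sample, reference)
# urban is over-represented (about 1.33), rural is under-represented (0.5)
```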

Table: Bias Mitigation Techniques

To reduce biases in AI models, mitigation techniques can be applied at different stages of the pipeline. The table below lists some common approaches.

| Mitigation Technique | Description |
|---|---|
| Pre-processing Techniques | Modifying the training data to reduce biases before training |
| Algorithmic Adjustments | Adapting the learning algorithm to compensate for identified biases |
| Post-processing Techniques | Applying corrections to the model's outputs |
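As one concrete pre-processing example, the sketch below implements reweighing (the approach described by Kamiran and Calders), which gives each training example the weight P(G = g) × P(Y = y) / P(G = g, Y = y). In the weighted data, the label becomes statistically independent of the group. The toy data is hypothetical; the resulting weights would be passed to a learner that accepts per-sample weights.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Reweighing: w(g, y) = P(G=g) * P(Y=y) / P(G=g, Y=y), estimated from counts.

    With raw counts this simplifies to n_g * n_y / (n * n_gy).
    """
    n = len(labels)
    n_group = Counter(groups)
    n_label = Counter(labels)
    n_joint = Counter(zip(groups, labels))
    return [n_group[g] * n_label[y] / (n * n_joint[(g, y)]) for g, y in zip(groups, labels)]


# Hypothetical training data: group "a" has more positive labels than group "b".
groups = ["a", "a", "a", "b", "b", "b"]
labels = [1, 1, 0, 1, 0, 0]

weights = reweighing_weights(groups, labels)
print(weights)  # [0.75, 0.75, 1.5, 1.5, 0.75, 0.75]
```

Under-represented combinations (positive examples in group "b", negative examples in group "a") are up-weighted, so the weighted positive rate is 0.5 in both groups.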

Table: Bias Mitigation Results

After employing bias mitigation techniques on the previously mentioned AI model, improvements in fairness are observed, reducing the impact of biases.

| Group | False Positive Rate | False Negative Rate |
|---|---|---|
| Men | 0.08 | 0.14 |
| Women | 0.10 | 0.15 |
| People of Color | 0.11 | 0.10 |
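The size of the improvement can be summarized by the largest between-group gap for each error rate. The sketch below recomputes those gaps from the detection and mitigation results tables; using max minus min as the gap is one reasonable choice among several. Note that the tables mix gender and race categories, so the groups overlap.

```python
# Error rates copied from the "Bias Detection Results" and "Bias Mitigation Results" tables.
before = {
    "Men": {"fpr": 0.12, "fnr": 0.15},
    "Women": {"fpr": 0.09, "fnr": 0.20},
    "People of Color": {"fpr": 0.18, "fnr": 0.08},
}
after = {
    "Men": {"fpr": 0.08, "fnr": 0.14},
    "Women": {"fpr": 0.10, "fnr": 0.15},
    "People of Color": {"fpr": 0.11, "fnr": 0.10},
}


def max_gap(table, metric):
    """Largest minus smallest value of `metric` across all groups, rounded to 2 places."""
    values = [row[metric] for row in table.values()]
    return round(max(values) - min(values), 2)


for metric in ("fpr", "fnr"):
    print(metric, max_gap(before, metric), "->", max_gap(after, metric))
# fpr 0.09 -> 0.03
# fnr 0.12 -> 0.05
```

Not every individual rate improves: the false positive rate for women rises slightly, from 0.09 to 0.10, even though both gaps narrow.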

Table: Bias Detection in Facial Recognition

Bias detection is crucial in facial recognition systems to prevent unfair treatment based on protected attributes such as race. The table below shows the bias detection outcome for different racial groups.

| Racial Group | Recognition Disparity (%) |
|---|---|
| White | 2.5 |
| Asian | 3.1 |
| African | 5.8 |

Table: Bias Detection in Sentiment Analysis

Bias detection is also vital in sentiment analysis systems to ensure equal treatment across different demographics. The following table presents the results of bias detection for various sentiment analysis models.

| Model | Bias Score (%) |
|---|---|
| Model A | 1.5 |
| Model B | 2.2 |
| Model C | 3.8 |

Table: Bias Detection in Loan Approval

Discrimination in loan approval systems can lead to unfair denials or approvals. The table below shows the bias detection results based on gender within a loan approval model.

| Gender | Approval Disparity (%) |
|---|---|
| Male | 8.2 |
| Female | 5.6 |

Conclusion: AI workflow feature engineering and bias detection play vital roles in the development of fair and effective AI models. Through careful feature engineering, performance improvements can be achieved. Detecting and mitigating biases are crucial to ensure fairness and prevent discrimination. By employing various techniques and approaches, biases can be identified, quantified, and mitigated, resulting in improved fairness across different AI applications.

Frequently Asked Questions

Question 1: What is feature engineering in the context of AI workflow?

Feature engineering is the process of selecting, transforming, and creating relevant features from raw data in order to improve the performance of an AI model. It involves identifying and extracting meaningful information from the dataset that can contribute to better prediction or classification.

Question 2: How does feature engineering affect the performance of AI models?

Feature engineering plays a vital role in determining the success of AI models. By carefully selecting and engineering features, models can be trained on high-quality and informative input variables, resulting in improved accuracy, reduced training time, and enhanced interpretability of the final predictions.

Question 3: What are some common techniques used in feature engineering?

Some common techniques used in feature engineering include feature scaling, one-hot encoding, handling missing values, binning, transforming variables, creating interaction terms, and applying domain-specific knowledge to engineer relevant features. These techniques help in representing the data in a format that is suitable for machine learning algorithms to process effectively.
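Three of the techniques listed above (handling missing values, one-hot encoding, and binning) can be sketched in a few lines of plain Python. The helpers, data, and bin edges are hypothetical; in practice pandas or scikit-learn would usually handle these steps.

```python
from statistics import median


def impute_median(values):
    """Handle missing values (None) by replacing them with the median of the observed ones."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]


def one_hot(values):
    """One-hot encode a categorical column; returns (encoded rows, category order)."""
    categories = sorted(set(values))
    rows = [[1 if v == c else 0 for c in categories] for v in values]
    return rows, categories


def bin_values(values, edges):
    """Binning: index of the first edge a value falls below, or len(edges) if none."""
    return [next((i for i, e in enumerate(edges) if v < e), len(edges)) for v in values]


ages = impute_median([23, None, 41, 67])         # [23, 41, 41, 67]
age_bins = bin_values(ages, edges=[30, 60])      # [0, 1, 1, 2]
colors, names = one_hot(["red", "blue", "red"])  # [[0, 1], [1, 0], [0, 1]], ['blue', 'red']
```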

Question 4: How does the bias detection feature work in an AI workflow?

The bias detection feature in AI workflow aims to identify and mitigate potential biases in AI models. It analyzes the model’s predictions across different user profiles, demographic categories, or sensitive attributes and raises alerts if it detects unfair or discriminatory behavior. It helps in ensuring that AI systems do not reinforce or amplify existing social biases.

Question 5: What are the consequences of not addressing biases in AI models?

If biases in AI models are not addressed, there can be serious ethical implications. Biased models may lead to unfair treatment, discrimination, or the perpetuation of societal biases against certain groups or demographics. Additionally, biased models may be less accurate or reliable for some groups, undermining the overall performance and trustworthiness of the AI system.

Question 6: How is bias detected in AI models?

Bias detection in AI models involves analyzing the model’s predictions and comparing them across different demographic groups or sensitive attributes. Statistical techniques, such as disparate impact analysis and fairness metrics, can be used to identify and quantify potential biases. Machine learning algorithms and rule-based approaches are commonly employed to flag potential bias-related issues.
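Disparate impact analysis is often summarized as the ratio of favorable-outcome rates between an unprivileged and a privileged group. A widely cited rule of thumb, the four-fifths rule from US employment-selection guidelines, treats a ratio below 0.8 as a sign of adverse impact. The sketch below computes the ratio on hypothetical predictions; the group labels and function names are assumptions for illustration.

```python
def selection_rate(y_pred, groups, group):
    """Share of favorable predictions (1s) among members of `group`."""
    preds = [p for p, g in zip(y_pred, groups) if g == group]
    return sum(preds) / len(preds)


def disparate_impact(y_pred, groups, unprivileged, privileged):
    """Ratio of selection rates: unprivileged group over privileged group."""
    return selection_rate(y_pred, groups, unprivileged) / selection_rate(y_pred, groups, privileged)


# Hypothetical predictions (1 = favorable outcome) for two groups of four people each.
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["group_a"] * 4 + ["group_b"] * 4

ratio = disparate_impact(y_pred, groups, unprivileged="group_b", privileged="group_a")
flagged = ratio < 0.8  # four-fifths rule of thumb
print(round(ratio, 3), flagged)  # 0.333 True
```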

Question 7: Can bias be completely eliminated from AI models?

While it is challenging to completely eliminate biases from AI models, efforts can be made to mitigate biases and minimize their impact. Techniques like data augmentation, balancing datasets, fairness-aware training, and regular evaluation and monitoring of models can significantly reduce biases. It is important to understand that complete elimination of biases is a complex task and requires ongoing efforts to ensure fairness and inclusivity.

Question 8: Is it possible to customize the bias detection feature in AI workflow?

AI workflow platforms often provide flexibility to customize the bias detection feature based on specific business requirements and context. Users can define their own sets of sensitive attributes, select fairness metrics, set threshold values for alert triggers, and tailor the bias detection algorithms to align with their unique needs. Customization allows organizations to address bias detection with more granularity and relevance.
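The kind of customization described above might look like the sketch below: a configuration naming the sensitive attribute, the metric, and an alert threshold, plus a check that raises an alert when the metric exceeds the threshold. The class, function, and field names are hypothetical and do not correspond to any particular platform's API.

```python
from dataclasses import dataclass


@dataclass
class BiasMonitorConfig:
    """Hypothetical bias-check settings; not the API of any specific platform."""
    sensitive_attribute: str
    metric: str = "selection_rate_gap"
    threshold: float = 0.1


def selection_rate_gap(records, attribute):
    """Largest difference in positive-prediction rate between values of `attribute`."""
    rates = []
    for value in {r[attribute] for r in records}:
        preds = [r["prediction"] for r in records if r[attribute] == value]
        rates.append(sum(preds) / len(preds))
    return max(rates) - min(rates)


# Registry of available metrics; users pick one by name in the config.
METRICS = {"selection_rate_gap": selection_rate_gap}


def check_bias(records, config):
    """Compute the configured metric and flag an alert when it exceeds the threshold."""
    value = METRICS[config.metric](records, config.sensitive_attribute)
    return {"metric": config.metric, "value": value, "alert": value > config.threshold}


# Hypothetical scored records from a deployed model.
records = (
    [{"region": "north", "prediction": p} for p in (1, 1, 0, 1)]
    + [{"region": "south", "prediction": p} for p in (1, 0, 0, 0)]
)
result = check_bias(records, BiasMonitorConfig(sensitive_attribute="region", threshold=0.2))
print(result)  # {'metric': 'selection_rate_gap', 'value': 0.5, 'alert': True}
```

Keeping metrics in a name-keyed registry lets the same check run with a different metric or threshold simply by changing the configuration.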

Question 9: What are the benefits of using AI workflow feature engineering and bias detection?

Feature engineering improves the performance and interpretability of models, resulting in more accurate and reliable predictions. Bias detection, meanwhile, helps keep AI models fair and ethically sound, reducing the risk of unintended consequences and potential harm to individuals or groups. Together, these features contribute to building responsible and inclusive AI systems.

Question 10: How can AI workflow feature engineering and bias detection be integrated into existing workflows?

AI workflow feature engineering and bias detection can be integrated into existing workflows through dedicated modules or plugins provided by AI workflow platforms. Organizations can leverage these tools to streamline the feature engineering process and implement bias detection mechanisms seamlessly. Integration often involves data preprocessing, model training, evaluation, and real-time monitoring of deployed models to ensure continuous improvement and fairness.