Who Is Responsible for Artificial Intelligence?

Artificial Intelligence (AI) has advanced rapidly in recent years, raising important questions about responsibility. As AI systems grow more capable, it becomes crucial to determine who is responsible for the decisions they make and the actions they take. Let's explore the key stakeholders and their roles in the realm of AI.

Key Takeaways:

- AI development involves multiple stakeholders.

- The responsibility for AI is shaped by legal, ethical, and societal factors.

- Determining liability is difficult because of unresolved problems with accountability and explainability.

- The debate on responsibility in AI encompasses various perspectives.

- Collaboration between all stakeholders is essential to ensure responsible AI development.

The Stakeholders in AI Responsibility

Responsibility for AI does not rest with a single entity. Instead, it is shared among the stakeholders involved in developing and deploying AI systems.

1. Researchers and Developers: AI researchers and developers have a key role in creating and shaping AI systems. They are responsible for designing the algorithms and models that form the foundation of AI.

Researchers and developers are at the forefront of AI innovation, driving breakthroughs in the field.

2. Data Providers: The data used to train AI models plays a crucial role in determining the behavior and outcomes of AI systems. Data providers, such as individuals or organizations, are responsible for selecting and preparing the data used in AI development.

Data providers hold the power to influence the performance and biases in AI systems through the data they choose.

3. Companies and Organizations: Companies and organizations that develop and deploy AI systems bear significant responsibility for ensuring that their technology is safe, fair, and accountable. They are responsible for addressing ethical concerns, monitoring how their AI systems behave, and implementing appropriate safeguards.

Companies and organizations must strike a balance between innovation and responsible AI practices.

4. Regulators and Policymakers: Governments and regulatory bodies play a crucial role in overseeing AI development and implementation. They are responsible for creating and enforcing laws, regulations, and standards that ensure AI technologies align with societal values and comply with legal frameworks.

Regulators and policymakers face the challenge of keeping pace with rapidly evolving AI technologies while addressing their potential risks.

Responsibility Challenges in AI

The responsibility landscape in AI is complex, with several challenges that need to be addressed.

1. Accountability: As AI systems become more autonomous, determining who is accountable for their decisions and actions becomes increasingly difficult. The line between human inputs and machine outcomes can blur, making it challenging to assign responsibility.

Accountability in AI requires clear mechanisms to identify responsible parties and allocate responsibility fairly.

2. Explainability: AI models often operate as black boxes, making it difficult for humans to understand the reasoning behind their decisions. Without that understanding, it is hard to verify that a given decision was sound or to trace an error back to its cause.

Ensuring AI explainability is crucial for building trust in AI systems and for holding the people and organizations behind them accountable.
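To make "clear mechanisms to identify responsible parties" more concrete, here is a minimal Python sketch of one such mechanism: a decision log that tags every AI output with the model version, training dataset, deploying organization, and optional human reviewer behind it. The `DecisionRecord` schema, the function names, and the sample identifiers are hypothetical illustrations, not an established standard.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Dict, Optional


@dataclass(frozen=True)
class DecisionRecord:
    """One logged AI decision plus the parties linked to it (hypothetical schema)."""
    decision_id: str
    output: str
    model_version: str          # the model artifact shipped by the developers
    training_data_id: str       # the dataset supplied by the data provider
    deployed_by: str            # the company or organization operating the system
    reviewed_by: Optional[str]  # the human reviewer, if one signed off
    timestamp: str              # UTC time the decision was recorded


def record_decision(decision_id: str, output: str, model_version: str,
                    training_data_id: str, deployed_by: str,
                    reviewed_by: Optional[str] = None) -> DecisionRecord:
    """Create an immutable record stamped with the current UTC time."""
    return DecisionRecord(
        decision_id=decision_id,
        output=output,
        model_version=model_version,
        training_data_id=training_data_id,
        deployed_by=deployed_by,
        reviewed_by=reviewed_by,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )


def responsible_parties(record: DecisionRecord) -> Dict[str, str]:
    """Map each stakeholder role to the identifier that traces back to it."""
    parties = {
        "developer": record.model_version,
        "data provider": record.training_data_id,
        "operator": record.deployed_by,
    }
    if record.reviewed_by is not None:
        parties["human reviewer"] = record.reviewed_by
    return parties


if __name__ == "__main__":
    # Hypothetical example: a declined loan application.
    rec = record_decision("loan-0042", "declined", "credit-model-v3.1",
                          "applications-2023Q4", "ExampleBank")
    print(json.dumps(asdict(rec), indent=2))
    print(responsible_parties(rec))
```

A log like this does not settle who is at fault, but it preserves the links between a decision and each stakeholder, which any later attempt to allocate responsibility depends on.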

Debating Responsibility Perspectives

Table 1: Perspectives on AI Responsibility

| Perspective | Description |
|---|---|
| Technological Determinism | Responsibility lies with the technology itself and its design. |
| Human-Centric | Humans are ultimately responsible for the actions and consequences of AI systems. |
| Shared Responsibility | Responsibility is distributed among various stakeholders involved in AI development and deployment. |
| Societal Responsibility | Responsibility extends to society as a whole, with a focus on fairness and equity. |

The debate surrounding responsibility in AI involves various perspectives.

- Technological Determinism: Some argue that responsibility lies solely with the technology itself, emphasizing the importance of designing AI systems that align with societal values and ethical principles.

- Human-Centric: Others believe that ultimate responsibility rests with humans as creators and deployers of AI systems. They argue that humans should be held accountable, as AI systems are tools or products of human intelligence.

- Shared Responsibility: Many advocate for distributing responsibility among all stakeholders involved in AI development and deployment, recognizing the collective impact of their actions.

- Societal Responsibility: Some argue that responsibility extends beyond individual stakeholders to encompass society as a whole, emphasizing the need for fairness, transparency, and equity in AI decision-making.

The Way Forward: Collaborative Responsibility

Addressing responsibility in AI requires collaboration between all stakeholders, who must work together to establish frameworks that promote responsible AI development and deployment. Key steps include:

- Open dialogue among stakeholders to collectively define responsible practices and standards.

- Investment in research and development of AI accountability and explainability mechanisms.

- Education and awareness programs to enhance understanding of AI ethics and responsible practices.

- Regulatory frameworks and policies that balance innovation and societal needs.

Table 2: AI Responsibility Framework

| Stakeholder | Responsibilities |
|---|---|
| Researchers and Developers | Design ethical AI algorithms and models that promote fairness and transparency. |
| Data Providers | Ensure data used for AI training is representative and free from biases. |
| Companies and Organizations | Implement safeguards and monitoring to ensure accountable AI behaviors. |
| Regulators and Policymakers | Create and enforce regulations that protect against AI risks and promote responsible use. |

By working collaboratively, stakeholders can collectively shape responsible AI practices and pave the way for a more accountable and ethical future.

Conclusion

The landscape of responsibility in artificial intelligence is multidimensional and involves multiple stakeholders. As AI technologies continue to advance, it is crucial to establish clear frameworks and practices that promote responsible development and deployment. The ongoing debate on AI responsibility showcases various perspectives, but a collaborative approach among stakeholders is the way forward to ensure the responsible and ethical use of AI.

Common Misconceptions

1. AI is solely the responsibility of tech companies

One common misconception is that responsibility for developing Artificial Intelligence (AI) lies solely in the hands of tech companies. While tech companies do play a significant role in AI research and development, responsibility for AI should be shared among multiple stakeholders.

- Governments have a responsibility to regulate AI to prevent misuse and protect public safety.

- Educational institutions play a role in training the next generation of AI professionals.

- The public should be informed and have a say in the ethical development and deployment of AI.

2. AI is completely autonomous and can make decisions without human intervention

An incorrect belief is that AI systems are completely autonomous and can operate and make decisions without any human intervention. In practice, most AI systems are designed to assist humans and depend on human oversight and control.

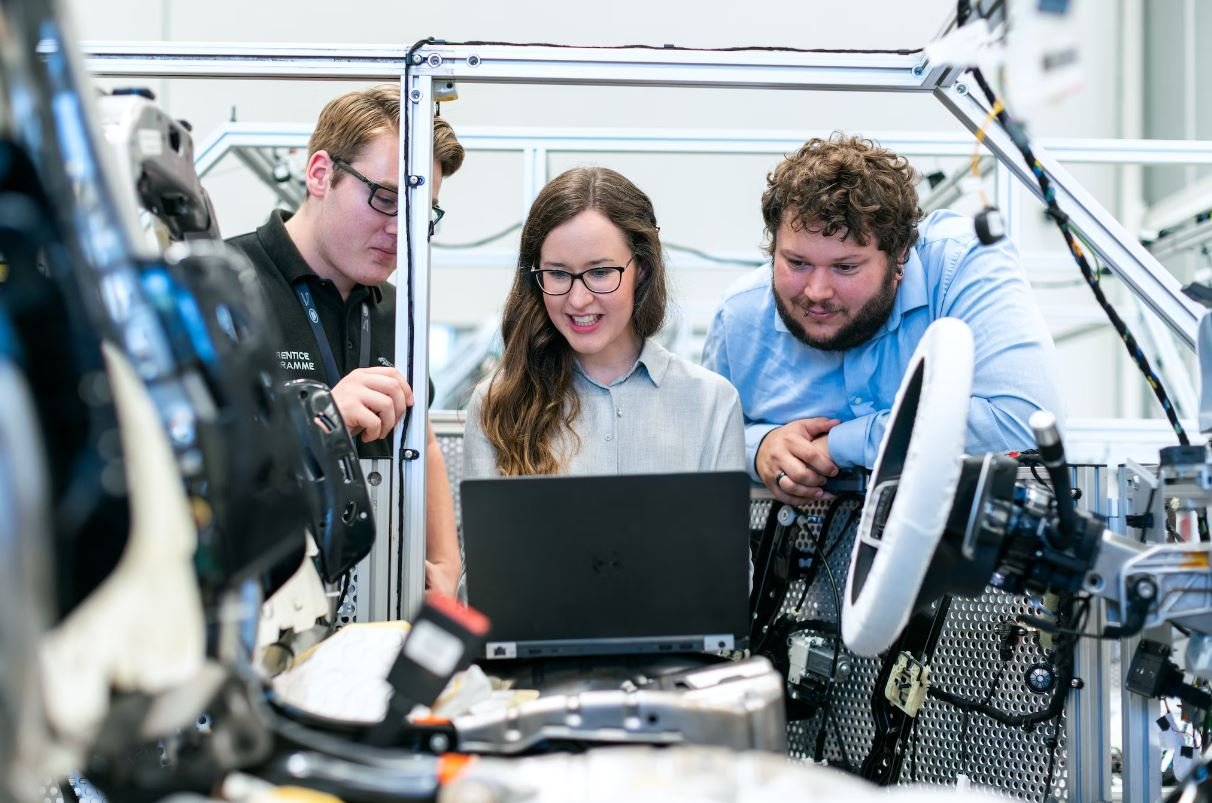

- Human operators are responsible for training AI models and fine-tuning their performance.

- Human oversight is crucial in ensuring that AI systems adhere to ethical guidelines and do not produce biased or discriminatory outputs.

- Human intervention is necessary to resolve ambiguous situations or when AI systems encounter unfamiliar scenarios.
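One common way to build in the human intervention described above is to let the system act on its own only when it is confident and the input resembles what it was trained on, and to escalate everything else to a person. The minimal Python sketch below assumes the model reports a confidence score and that a separate check (not shown, and hard to build in practice) flags unfamiliar inputs; the `Prediction` class, the `route` function, and the 0.9 threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    """A model output with the signals used to decide on human review (hypothetical)."""
    label: str
    confidence: float       # model's probability for its top label, 0.0 to 1.0
    in_distribution: bool   # False if the input looks unlike the training data


def route(prediction: Prediction, threshold: float = 0.9) -> str:
    """Return "auto" if the output can be used directly, else "human_review".

    The 0.9 default is an illustrative assumption; real thresholds depend on
    the application and the cost of errors.
    """
    if not 0.0 <= prediction.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if not prediction.in_distribution:
        return "human_review"  # unfamiliar scenario: escalate to a person
    if prediction.confidence < threshold:
        return "human_review"  # ambiguous case: the model is unsure
    return "auto"


if __name__ == "__main__":
    print(route(Prediction("approve", 0.97, True)))   # auto
    print(route(Prediction("approve", 0.62, True)))   # human_review
    print(route(Prediction("approve", 0.99, False)))  # human_review
```

Checking for unfamiliar inputs before checking confidence reflects the fact that a model can be highly confident and still wrong on data unlike anything it has seen.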

3. Responsibility for AI lies only with its creators

Another common misconception is that responsibility for AI lies solely with its creators or developers. However, responsibility for AI encompasses a broader range of individuals and organizations.

- Users and organizations that utilize AI systems have a responsibility to use them ethically and responsibly.

- Regulators need to ensure that AI systems are subjected to appropriate standards and comply with legal requirements.

- Industry associations have a role in setting ethical guidelines and best practices for AI development and deployment.

4. AI is unbiased and objective

Many people assume that AI is free from biases and provides objective results. However, AI systems can inherit biases from the data they are trained on and may perpetuate and amplify existing unfairness and discrimination.

- It is crucial to carefully select training data and regularly evaluate AI models for unintended biases.

- Diverse and inclusive teams should be involved in AI development to help identify and reduce bias and ensure fairness.

- Ongoing monitoring and auditing of AI systems can help detect and address any biases that emerge in real-world use.
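As an illustration of what "regularly evaluate AI models for unintended biases" can look like, the minimal Python sketch below compares how often an AI system selects members of different groups (for example, candidates passed by a resume screener). It computes the ratio of the lowest group selection rate to the highest and flags ratios below 0.8, a threshold borrowed from the "four-fifths" rule of thumb in US employment-selection guidance. The function names and sample data are hypothetical, and this is only one of several fairness metrics: a passing ratio does not by itself show that a system is fair.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of positive outcomes (e.g. 'hired', 'approved') for each group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, selected in outcomes:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(outcomes: Iterable[Tuple[str, bool]]) -> float:
    """Lowest group selection rate divided by the highest (1.0 = equal rates)."""
    rates = selection_rates(outcomes)
    if not rates:
        raise ValueError("no outcomes to audit")
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group was selected at all, so rates are equal
    return min(rates.values()) / highest


def audit(outcomes: Iterable[Tuple[str, bool]],
          threshold: float = 0.8) -> Tuple[float, bool]:
    """Return (ratio, flagged); 0.8 follows the 'four-fifths' rule of thumb."""
    ratio = disparate_impact_ratio(outcomes)
    return ratio, ratio < threshold


if __name__ == "__main__":
    # Hypothetical screening results: group A selected 8/10, group B 4/10.
    results = ([("A", True)] * 8 + [("A", False)] * 2
               + [("B", True)] * 4 + [("B", False)] * 6)
    print(selection_rates(results))  # {'A': 0.8, 'B': 0.4}
    print(audit(results))            # (0.5, True)
```

Running the same check on live decisions at regular intervals, rather than once before launch, is one way to carry out the ongoing monitoring described above.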

5. AI will replace human jobs completely

There is a common fear that AI will completely replace human jobs, leading to widespread unemployment. While AI has the potential to automate certain tasks, it is more likely to augment human capabilities rather than replace humans entirely.

- AI can help humans with tedious and repetitive tasks, allowing them to focus on more complex and creative endeavors.

- New job opportunities can emerge as AI technology evolves, requiring humans to manage and collaborate with AI systems.

- Retraining and upskilling programs can help workers adapt to the changing job landscape influenced by AI.

Introduction

Artificial intelligence (AI) has become an integral part of our lives, affecting fields such as healthcare, finance, and transportation. As its importance grows, so does the question of who should be held responsible for the development and use of AI. This article explores the stakeholders in the AI ecosystem and their roles. Through a series of tables, we present data and information that shed light on responsibility and culpability within the realm of artificial intelligence.

1. Public Perception of Responsibility

Public opinion plays a crucial role in shaping how accountability for AI technologies is assigned. This table shows how much responsibility respondents to a national survey assigned to each stakeholder.

| Stakeholder | Perceived Responsibility (%) |
|---|---|
| Government | 45% |
| AI Developers | 31% |
| Individuals | 14% |
| Regulatory Agencies | 6% |
| Businesses | 4% |

2. Ethical Guidelines by AI Developers

AI developers often create ethical guidelines to ensure responsible use of their technologies. This table highlights some critical elements of ethical guidelines implemented by top AI companies.

| AI Company | Ethical Guidelines | Date of Adoption |
|---|---|---|
|  | Fairness, Privacy, Accountability, Transparency | 2018 |
| Microsoft | Reliability, Fairness, Privacy, Inclusiveness | 2019 |
| IBM | Transparency, Accountability, Advancement of Human Abilities | 2017 |
|  | Transparency, Accountability, Safety | 2018 |
| OpenAI | Broadly Distributed Benefits, Long-term Safety | 2015 |

3. Legal Responsibility for AI Errors

When AI systems cause errors or accidents, the question of legal responsibility becomes central. The table below summarizes how different countries approach negligence and liability for AI.

| Country | AI Liability Framework |
|---|---|
| United States | Operator Liability with exceptions for fully autonomous systems |
| Germany | Strict Product Liability with exceptions for unforeseeable AI actions |
| Japan | Specific Provisions for Product Liability and AI misuse |
| Canada | Existing Liability Framework and focus on AI-specific safety policies |
| China | Manufacturer Liability and regulatory guidelines for AI safety |

4. Ethical Considerations in AI Decision-Making

AI algorithms are trained on data and make decisions that impact individuals and society. This table illustrates some ethical considerations to be included in AI decision-making processes.

| Ethical Considerations | Importance |
|---|---|
| Fairness | High |
| Transparency | Medium |
| Privacy | High |
| Accountability | High |
| Explainability | Medium |

5. Big Tech’s Impact on AI Development

Large technology companies often dominate the AI landscape because of their extensive resources and expertise. This table lists leading AI companies by research output and patent filings.

| AI Company | No. of Research Papers | Patents Filed |
|---|---|---|
| IBM | 2,100+ | 8,800+ |
|  | 1,800+ | 9,200+ |
| Microsoft | 1,700+ | 10,000+ |
|  | 1,400+ | 4,500+ |
| OpenAI | 800+ | 2,000+ |

6. Impact of AI on Job Market

The adoption of AI technologies raises concerns about job displacement and economic impact. This table provides an overview of the potential impact of AI on job sectors.

| Job Sector | Potential Impact of AI |
|---|---|
| Manufacturing | 10-20% job displacement |
| Transportation | Up to 70% job displacement in the long term |
| Healthcare | Enhancing 70% of jobs while eliminating 1-2% of roles |
| Finance | Streamlining and reducing redundant jobs |
| Customer Service | Replacing routine tasks, creating new job roles |

7. AI in Healthcare Decision-Making

AI is transforming healthcare by aiding in diagnosis and treatment decisions. This table showcases the accuracy of AI algorithms compared to human experts in different medical specialties.

| Medical Specialty | AI Accuracy (%) | Human Expert Accuracy (%) |
|---|---|---|
| Radiology | 93% | 88% |
| Ophthalmology | 94% | 88% |
| Dermatology | 96% | 88% |
| Oncology | 90% | 82% |
| Cardiology | 92% | 80% |

8. Regulation of AI Technologies

Regulatory bodies and governments play a crucial role in overseeing and guiding AI developments. This table demonstrates the different regulatory approaches adopted by various countries.

| Country | AI Regulatory Approach | Adoption Year |
|---|---|---|
| United States | Light-Touch Regulation and Self-Regulation | 2016 |
| European Union | Risk-Based Approach with Ethical Guidelines | 2018 |
| China | Comprehensive Regulation and Ethical Framework | 2017 |
| Canada | Collaborative Approach with Industry and Academia | 2019 |
| Australia | Adaptive Regulation Approach | 2020 |

9. AI and Social Bias

AI algorithms can inadvertently perpetuate biases present in the training data. This table presents examples of biased AI systems and their impact on society.

| AI Application | Social Bias Observed | Impact on Society |
|---|---|---|
| Criminal Justice | Racial bias in risk assessment | Disproportionate sentencing outcomes |
| Recruitment | Gender bias in resume screening | Discriminatory hiring practices |
| Loan Approvals | Racial and socioeconomic bias | Unequal access to financial opportunities |
| Online Advertising | Gender and racial bias in targeting | Reinforcing stereotypes and inequality |
| Automated Content Moderation | Biases in hate speech detection | Unfair censorship and suppression |

Conclusion

As AI continues to advance, the responsibility for its development and use becomes a pressing concern. This article has examined various stakeholders, ethical considerations, legal frameworks, and societal impacts to help us understand who should bear the responsibility for AI. From public perceptions to government regulations, each stakeholder plays a unique part in ensuring a responsible and accountable AI ecosystem. By addressing and mitigating the challenges presented, society can harness the transformative power of AI while minimizing its potential risks.

Frequently Asked Questions

What is Artificial Intelligence?

Artificial Intelligence (AI) refers to the ability of machines or computer systems to exhibit human-like intelligence, including learning, problem-solving, and decision-making capabilities.

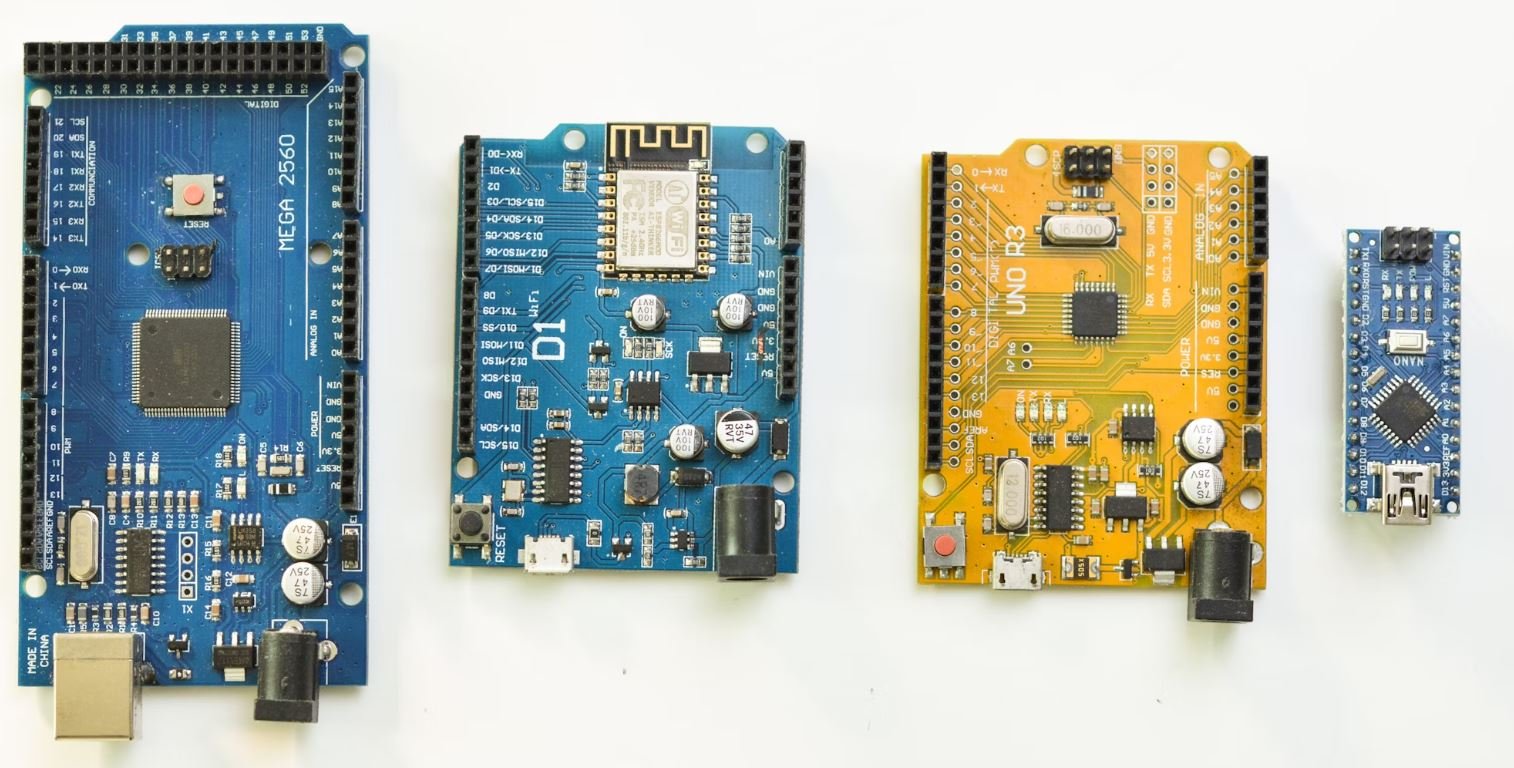

Who develops Artificial Intelligence?

AI is primarily developed by a diverse range of organizations including technology companies, research institutions, and government agencies. These entities invest in AI research and development to create innovative solutions for various sectors.

Who owns Artificial Intelligence?

Artificial Intelligence itself is a concept and a technology rather than a physical entity; therefore, it cannot be "owned" by any individual or organization. However, specific AI applications, algorithms, or intellectual property developed by organizations can be subject to ownership rights.

Who is responsible for regulating Artificial Intelligence?

The responsibility for regulating AI is shared among different stakeholders, including governments, regulatory bodies, and industry associations. These entities work together to establish guidelines, standards, and policies to ensure the responsible and ethical development and use of AI.

Who is accountable for AI if something goes wrong?

Determining accountability in AI-related incidents can be complex and depends on various factors, such as the type of AI involved, the specific context of its use, and applicable laws and regulations. Parties potentially accountable may include developers, operators, users, and regulatory bodies.

Who benefits from Artificial Intelligence?

Artificial Intelligence has the potential to benefit multiple stakeholders, including individuals, businesses, and society as a whole. AI technologies can enhance productivity, improve decision-making processes, enable new services, and contribute to solving complex societal challenges.

Who ensures the ethical use of Artificial Intelligence?

Ensuring the ethical use of AI involves the collective efforts of developers, organizations, policymakers, and society at large. Strategies such as developing ethical frameworks, promoting transparency, and fostering public awareness play critical roles in maintaining the ethical use of AI.

Who is responsible for AI’s impact on jobs?

The impact of AI on jobs is a multifaceted issue, and responsibility lies with multiple stakeholders. Governments, educational institutions, employers, and workers themselves share the responsibility of preparing for and adapting to changes in the job market due to AI advancements.

Who determines AI’s ethical boundaries?

The determination of AI’s ethical boundaries is a collaborative effort involving experts from various fields, including ethics, law, sociology, and technology. International organizations, research institutions, and industry leaders contribute to defining and updating ethical guidelines for AI development and deployment.

Who drives the future of Artificial Intelligence?

The future of AI is driven collectively by researchers, developers, policymakers, industry leaders, and other stakeholders. Collaboration and knowledge-sharing among these parties contribute to shaping the direction, capabilities, and applications of AI in the years to come.